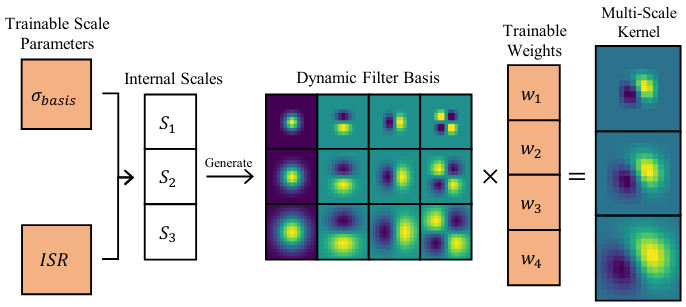

Dynamic Multi-Scale Kernel generation pipeline. The filter basis is parameterised by a discrete set of scales, which in turn are generated from learnable parameters that control both the size of the first scale ("σ basis") and the range the internal scales span (ISR). A linear combination of the Dynamic Filter Basis functions with trainable weights forms the Multi-Scale Kernel.
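The pipeline in the caption can be sketched in a few lines. This is a minimal, non-authoritative illustration under several assumptions that the caption does not confirm. The internal scales are taken to be geometrically spaced from `sigma_basis` to `sigma_basis * isr` (reading ISR as the ratio between the largest and smallest scale). The basis is assumed to be separable Hermite-Gaussian (Gaussian-derivative) functions, a common choice in scale-equivariant CNNs that may differ from the paper's basis. All function names are hypothetical. NumPy only runs the forward computation; in an autodiff framework the same steps would yield gradients for `sigma_basis` and `isr`, and that is what makes the scales learnable.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermeval


def internal_scales(sigma_basis: float, isr: float, num_scales: int) -> np.ndarray:
    """Assumed spacing: geometric, from sigma_basis up to sigma_basis * isr."""
    if num_scales == 1:
        return np.array([sigma_basis], dtype=float)
    exponents = np.arange(num_scales) / (num_scales - 1)  # 0, ..., 1
    return sigma_basis * isr ** exponents


def basis_1d(sigma: float, size: int, order: int) -> np.ndarray:
    """1D Gaussian-derivative function: Hermite polynomial He_order times a Gaussian."""
    x = np.arange(size) - (size - 1) / 2  # pixel grid centred on 0
    t = x / sigma
    coeffs = np.zeros(order + 1)
    coeffs[order] = 1.0  # select the single polynomial He_order
    return hermeval(t, coeffs) * np.exp(-t**2 / 2)


def filter_basis(sigma: float, size: int, max_order: int) -> np.ndarray:
    """Separable 2D basis at one scale, shape (num_basis, size, size)."""
    functions = []
    for order_y in range(max_order + 1):
        for order_x in range(max_order + 1):
            b = np.outer(basis_1d(sigma, size, order_y), basis_1d(sigma, size, order_x))
            functions.append(b / np.linalg.norm(b))  # unit norm at every scale
    return np.stack(functions)


def multiscale_kernel(weights, sigma_basis, isr, num_scales, size, max_order):
    """Apply the same trainable weights to the basis at every internal scale."""
    scales = internal_scales(sigma_basis, isr, num_scales)
    bases = np.stack([filter_basis(s, size, max_order) for s in scales])  # (S, B, k, k)
    return np.einsum("b,sbij->sij", weights, bases)  # (S, k, k)


# Usage: 3 internal scales spanning a factor of 2, 7x7 kernels, 9 basis functions.
rng = np.random.default_rng(0)
max_order = 2
weights = rng.normal(size=(max_order + 1) ** 2)  # trainable basis coefficients
kernel = multiscale_kernel(weights, sigma_basis=1.0, isr=2.0,
                           num_scales=3, size=7, max_order=max_order)
print(kernel.shape)                  # (3, 7, 7)
print(internal_scales(1.0, 2.0, 3))  # approx. [1.0, 1.414, 2.0]
```

Sharing one weight vector across all scales is what ties the per-scale kernels together. Changing `sigma_basis` or `isr` moves every scale at once without adding parameters.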

Scale Learning in Scale-Equivariant Convolutional Networks

Mark Basting, Robert-Jan Bruintjes, Thaddäus Wiedemer, Matthias Kümmerer, Matthias Bethge, Jan van Gemert

Proceedings of the 19th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISAPP 2024)

Objects can take up an arbitrary number of pixels in an image: objects come in different sizes, and photographs of these objects may be taken at various distances from the camera. These pixel-size variations are problematic for CNNs, causing them to learn separate filters for scaled variants of the same objects, which prevents learning across scales. Scale-equivariant approaches address this by sharing features across a set of pre-determined, fixed internal scales. These works, however, give little information about how best to choose the internal scales when the underlying distribution of object sizes in the dataset (the scale distribution) is unknown. In this work we investigate learning the distribution of internal scales in scale-equivariant CNNs, allowing them to adapt to unknown data scale distributions. We show that our method can learn the internal scales on various data scale distributions and can adapt the internal scales in current scale-equivariant approaches.
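The following toy sketch shows why the choice of fixed internal scales matters. It uses a classical scale-space stand-in, the scale-normalised Laplacian of Gaussian, rather than the paper's learned CNN filters, so it is an illustration and not the authors' method. A single shared filter is evaluated at a fixed set of internal scales. For a Gaussian blob of size `s0`, the response peaks at the internal scale closest to `s0`. Once `s0` falls outside the covered range, the estimate simply clips at the boundary. All function names, the 129-pixel grid and the scale set `internal` are hypothetical choices.

```python
import numpy as np


def centred_grid(size: int):
    """x, y pixel coordinates with the origin at the image centre."""
    c = (size - 1) / 2
    y, x = np.mgrid[0:size, 0:size] - c
    return x, y


def gaussian_blob(size: int, s0: float) -> np.ndarray:
    """Synthetic 'object' whose size is set by s0 (in pixels)."""
    x, y = centred_grid(size)
    return np.exp(-(x**2 + y**2) / (2 * s0**2))


def normalised_log(size: int, sigma: float) -> np.ndarray:
    """Negated, scale-normalised Laplacian of Gaussian: -sigma^2 * LoG."""
    x, y = centred_grid(size)
    r2 = x**2 + y**2
    g = np.exp(-r2 / (2 * sigma**2)) / (2 * np.pi * sigma**2)
    return (2 * sigma**2 - r2) / sigma**2 * g


def scale_responses(image: np.ndarray, scales) -> np.ndarray:
    """One shared filter evaluated at each internal scale, at the image centre."""
    size = image.shape[0]
    return np.array([(image * normalised_log(size, s)).sum() for s in scales])


def estimated_scale(image: np.ndarray, scales) -> float:
    """Internal scale with the strongest response."""
    scales = np.asarray(scales, dtype=float)
    return float(scales[np.argmax(scale_responses(image, scales))])


internal = 2 ** np.linspace(0, 3, 7)  # 1, ~1.41, 2, ~2.83, 4, ~5.66, 8
for s0 in (2.0, 4.0, 20.0):
    # s0 = 2 -> 2.0, s0 = 4 -> 4.0, s0 = 20 -> 8.0 (clipped at the largest scale)
    print(s0, estimated_scale(gaussian_blob(129, s0), internal))
```

In the continuous limit the peak response equals 0.5 for every blob size, so responses at different scales are directly comparable. What this fixed construction cannot do is move `internal` when the data's object sizes lie outside it, which is the gap the abstract describes.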

BibTeX

@inproceedings{basting2023scale, title={Scale Learning in Scale-Equivariant Convolutional Networks}, author={Basting, Mark and Bruintjes, Robert-Jan and Wiedemer, Thadd{\"a}us and K{\"u}mmerer, Matthias and Bethge, Matthias and van Gemert, Jan}, booktitle={Proceedings of the 19th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications (VISAPP 2024)}, pages={567--574}, year={2024} }