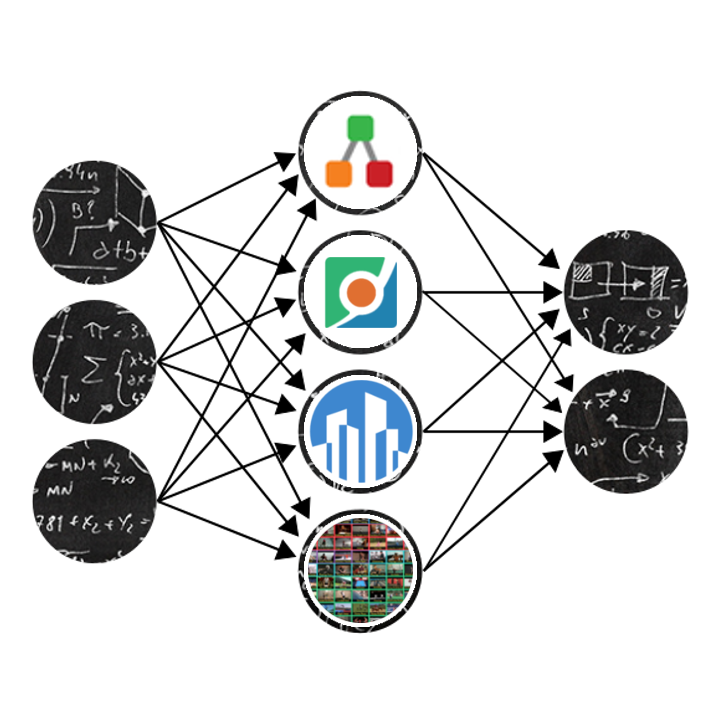

Logo of the 2nd VIPriors competition

Logo of the 2nd VIPriors competition

VIPriors 2: Visual Inductive Priors for Data-Efficient Deep Learning Challenges

Attila Lengyel, Robert-Jan Bruintjes, Marcos Baptista Rios, Osman Semih Kayhan, Davide Zambrano, Nergis Tomen, Jan van Gemert

arXiv

The second edition of the “VIPriors: Visual Inductive Priors for Data-Efficient Deep Learning” challenges featured five data-impaired challenges covering key computer vision tasks. In each challenge, models are trained from scratch on a reduced number of training samples. To encourage new and creative ideas on incorporating relevant inductive biases to improve the data efficiency of deep learning models, we prohibited the use of pre-trained checkpoints and other transfer learning techniques. Participants outperformed the provided baselines by a large margin in all five challenges, mainly thanks to extensive data augmentation policies, model ensembling, and data-efficient network architectures.

BibTeX

@misc{lengyel2022vipriors, title={VIPriors 2: Visual Inductive Priors for Data-Efficient Deep Learning Challenges}, author={Attila Lengyel and Robert-Jan Bruintjes and Marcos Baptista Rios and Osman Semih Kayhan and Davide Zambrano and Nergis Tomen and Jan van Gemert}, year={2022}, eprint={2201.08625}, archivePrefix={arXiv}, primaryClass={cs.CV} }